In week 6 I learned about multivariable linear regression.

The concept

Multivariable linear regression is similar to the basic linear regression I did last week. The only difference is that there are multiple independent variables affecting the output, so we need a mathematical method to calculate the coefficients of a linear equation that describes the distribution of the data across all of those independent variables.
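The post does not write the formulas out, so this block assumes the common textbook notation. It uses n independent variables, m training examples and learning rate α. Under those assumptions, it gives the model, the cost it minimises and the gradient descent update for each coefficient θ_j:

```latex
h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \cdots + \theta_n x_n = \theta^{T} x \quad (x_0 = 1)

J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2

\theta_j := \theta_j - \frac{\alpha}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}, \quad j = 0, 1, \dots, n
```

With two inputs (PM2.5 and PM2.5/hr), n = 2, so the model has three coefficients θ_0, θ_1 and θ_2.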

Why we are doing this

For a machine to make accurate predictions, it needs to learn from accurate data. However, most real data has several independent variables that affect the output, so multivariable linear regression is needed to find the linear relationship between all of those inputs and the output. With that relationship, the machine can give accurate predictions for new combinations of inputs.

How I went

- I successfully ran the code provided in the Google Docs with the provided data.

- When I tried to implement multivariable linear regression on my own data, I found some bugs and fixed them:
  - i.e. the length of `X` was fixed at `47` when computing the cost or plotting, which breaks as soon as there are more or fewer than 47 data entries.
- While sorting the data, it is essential to drop all `NaN` values in the dataframe; otherwise errors will occur during the calculations.
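The two fixes above can be sketched with pandas and NumPy. This is a minimal sketch, not the code from the Google Docs. The column names and the `prepare` / `compute_cost` helpers are hypothetical, and the real ACT Open Data export may name its columns differently. The key point is that `m` comes from the cleaned data instead of a constant like 47:

```python
import numpy as np
import pandas as pd

# Hypothetical column names; the real ACT Open Data export may differ.
FEATURES = ["PM2.5", "PM2.5_1hr"]
TARGET = "AQI_PM2.5"


def prepare(df: pd.DataFrame):
    """Drop rows with missing values, then build X (with a bias column) and y."""
    clean = df[FEATURES + [TARGET]].dropna()  # remove every row containing NaN
    m = len(clean)  # number of examples comes from the data, not a fixed 47
    X = np.column_stack([np.ones(m), clean[FEATURES].to_numpy(dtype=float)])
    y = clean[TARGET].to_numpy(dtype=float)
    return X, y


def compute_cost(X: np.ndarray, y: np.ndarray, theta: np.ndarray) -> float:
    """J(theta) = 1/(2m) * sum of squared errors, with m taken from len(y)."""
    m = len(y)
    errors = X @ theta - y
    return float(errors @ errors / (2 * m))


# Tiny made-up example: two of the four rows contain NaN and are dropped.
df = pd.DataFrame({
    "PM2.5": [1.0, 2.0, np.nan, 4.0],
    "PM2.5_1hr": [0.5, 1.0, 1.5, np.nan],
    "AQI_PM2.5": [3.0, 5.0, 7.0, 9.0],
})
X, y = prepare(df)
print(X.shape, compute_cost(X, y, np.zeros(3)))
```

Because both functions read the size from their inputs, the same code works whether the dataset has 47 rows or 1000.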

What I did

I did a linear regression analysis of PM2.5 and PM2.5/hr against AQI_PM2.5. I collected the data from the ACT Open Data Portal and implemented it as follows:

```python
import numpy as np
```

I ran 1000 epochs on 1000 data points with a learning rate of 0.01; using more would take too long for my laptop to process.
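The training loop with these settings (1000 epochs, learning rate 0.01) might look like this minimal NumPy sketch of batch gradient descent. It is not the code from the Google Docs. The function name and signature are hypothetical, and it assumes `X` already has a leading column of ones:

```python
import numpy as np


def gradient_descent(X, y, theta, alpha=0.01, epochs=1000):
    """Batch gradient descent for multivariable linear regression.

    X     : (m, n + 1) array whose first column is all ones (the bias term)
    y     : (m,) array of target values
    theta : (n + 1,) array of starting coefficients
    Returns the fitted theta and the cost J(theta) recorded after every epoch.
    """
    m = len(y)  # number of examples, taken from the data
    cost_history = np.zeros(epochs)
    for epoch in range(epochs):
        errors = X @ theta - y
        # Update every theta_j at once: theta -= alpha/m * X^T (X theta - y)
        theta = theta - (alpha / m) * (X.T @ errors)
        residual = X @ theta - y
        cost_history[epoch] = residual @ residual / (2 * m)
    return theta, cost_history


# Toy check on synthetic data generated from y = 1 + 2*x1 + 3*x2.
# A larger rate and more epochs than the defaults let this tiny example converge fully.
x1 = np.array([0.0, 1.0, 0.0, 1.0, 0.5])
x2 = np.array([0.0, 0.0, 1.0, 1.0, 0.5])
X = np.column_stack([np.ones_like(x1), x1, x2])
y = 1 + 2 * x1 + 3 * x2
theta, cost_history = gradient_descent(X, y, np.zeros(3), alpha=0.1, epochs=5000)
print(theta)  # close to [1, 2, 3]
```

The fitted `theta` has one entry per column of `X`, so two input features plus the bias column give three coefficients. That matches the three values reported in the results, assuming the intercept comes first.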

Results

My coefficients:

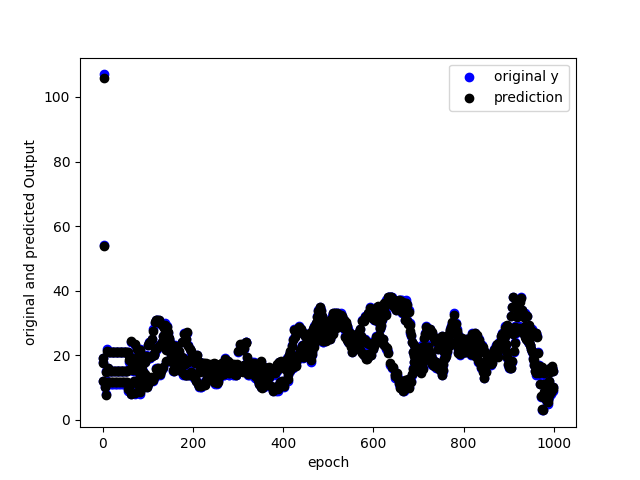

`[-0.10525957 3.93943139 -0.00469064]`

Original vs Prediction:

As it shows, the model is really accurate: most of the predictions overlap the original values.

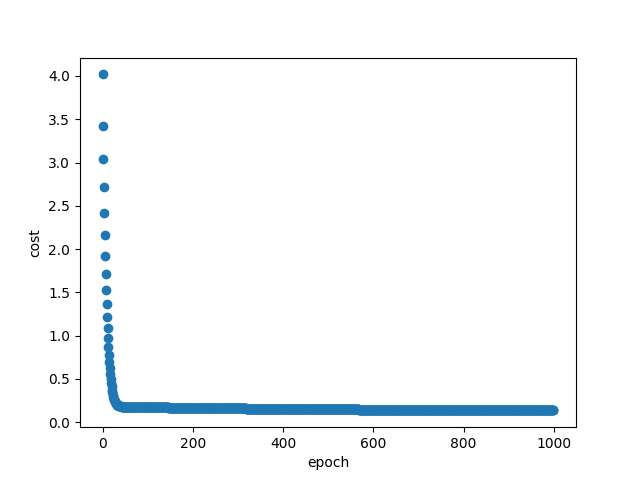

Cost of the linear regression:

Again, the cost supports my confidence in the calculated coefficients.
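A cost curve that never rises and has flattened out signals that gradient descent has converged. Below is a small heuristic check for that, assuming the per-epoch costs are kept in a list or array. The function name and the default tolerance are arbitrary choices, not part of the original code:

```python
import numpy as np


def cost_has_converged(cost_history, rel_tol=1e-4):
    """Heuristic: the cost never increased, and the final epoch changed it
    by less than rel_tol relative to the previous value."""
    costs = np.asarray(cost_history, dtype=float)
    if costs.size < 2:
        return False  # not enough epochs to judge
    never_increased = bool(np.all(np.diff(costs) <= 0))
    last_change = abs(costs[-1] - costs[-2]) / max(abs(costs[-2]), 1e-12)
    return bool(never_increased and last_change < rel_tol)


print(cost_has_converged([10.0, 5.0, 4.0, 3.9999]))  # flattened out
print(cost_has_converged([10.0, 5.0, 2.5]))          # still dropping fast
```

A converged cost means gradient descent has settled on the best coefficients a linear model can find for this data. It does not by itself show that the fit is good; that is what the Original vs Prediction comparison is for.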

Conclusion

Everything went well this week. I learned some maths, learned how to implement multivariable linear regression, and successfully analysed the distribution of the data I provided.