In Term 4 Week 3, I was busy preparing for my English presentation. However, I still followed my plan to finish the documentation of my project. I also published a new release of my high-performance C# repository.

Progress

School work

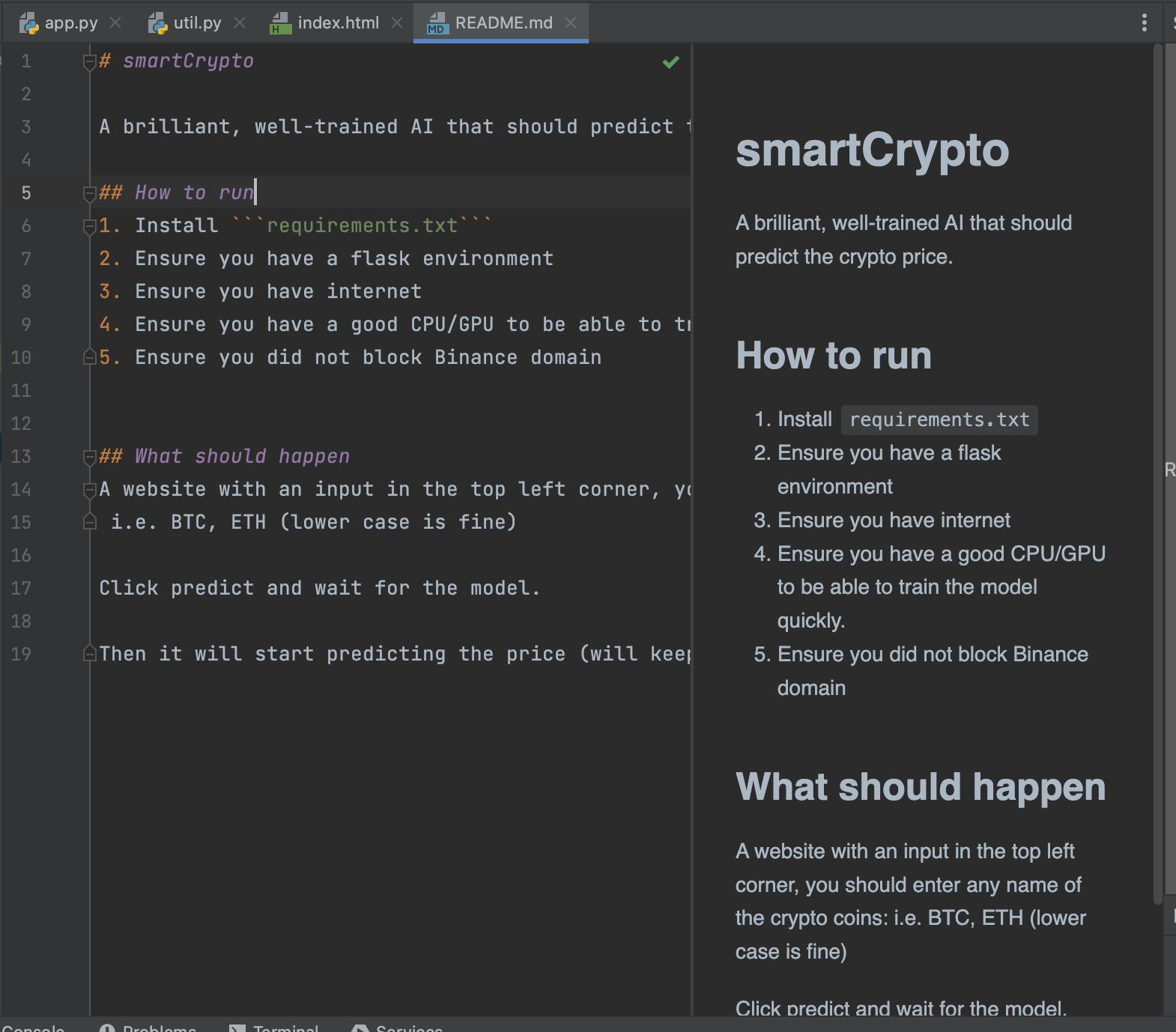

I finished my User Documentation. In the README file, I explained how to run my project and described the expected output.

C# Project

I released Nino.Serialization v1.0.19.2, which improves the performance of both serialization and deserialization.

I used the following methods to optimize performance:

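Use generic types to avoid boxing/unboxing, and provide a low-level unboxing path (`Unsafe.Unbox`) for values that are already boxed.

Boxing a value type allocates a new object on the heap (which also adds garbage-collection pressure), and unboxing requires a type check plus a copy, so both are expensive on a hot path. I therefore decided to use generic methods to avoid boxing and unboxing.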
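A minimal, self-contained sketch of the idea (not Nino's actual code): because `WriteEnum<T>` is generic over the enum type, the value is never converted to `object`, so no box is allocated, and `Unsafe.WriteUnaligned` copies its bytes straight into the target span. The enums `Color` and `Flags` and the class and method names are hypothetical, and the sketch writes the raw underlying value without Nino's number compression. `ToInt64Boxed` is included only as the boxing path for contrast.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// Hypothetical enums used only for this sketch
public enum Color : byte { Red = 1, Green = 2, Blue = 3 }
public enum Flags : long { None = 0, Big = 1L << 40 }

public static class EnumWriterSketch
{
    // Generic path: T is the concrete enum type, so the value is never boxed.
    // Writes the raw underlying value (machine byte order) and returns the new position.
    public static int WriteEnum<T>(Span<byte> buffer, int position, T value) where T : struct, Enum
    {
        int size = Unsafe.SizeOf<T>();                    // 1 for Color, 8 for Flags
        Span<byte> target = buffer.Slice(position, size); // bounds-checked slice
        Unsafe.WriteUnaligned(ref MemoryMarshal.GetReference(target), value);
        return position + size;
    }

    // Boxing path for contrast: passing the enum as object allocates a box on the heap,
    // and the conversion has to inspect the runtime type.
    public static long ToInt64Boxed(object value) => Convert.ToInt64(value);
}
```

For example, `WriteEnum(buffer, 0, Color.Blue)` writes the single byte `3` and returns `1`, while a `Flags` value takes the next 8 bytes.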

In Nino.Serialization, I applied this approach to serializing enums:

```csharp
/// <summary>
/// Compress and write enum
/// </summary>
/// <param name="val"></param>
public unsafe void CompressAndWriteEnum<T>([In] T val)
{
    var type = typeof(T);
    // The enum arrived boxed as object: look up its real type and read the
    // payload in place with Unsafe.Unbox instead of a normal unboxing copy
    if (type == ConstMgr.ObjectType)
    {
        type = val.GetType();
        switch (TypeModel.GetTypeCode(type))
        {
            case TypeCode.Byte:
                buffer[position++] = Unsafe.Unbox<byte>(val);
                return;
            case TypeCode.SByte:
                // Store the sbyte's bits as a byte
                buffer[position++] = (byte)Unsafe.Unbox<sbyte>(val);
                return;
            case TypeCode.Int16:
                Unsafe.As<byte, short>(ref buffer.AsSpan(position, 2).GetPinnableReference()) =
                    Unsafe.Unbox<short>(val);
                position += 2;
                return;
            case TypeCode.UInt16:
                Unsafe.As<byte, ushort>(ref buffer.AsSpan(position, 2).GetPinnableReference()) =
                    Unsafe.Unbox<ushort>(val);
                position += 2;
                return;
            case TypeCode.Int32:
                CompressAndWrite(ref Unsafe.Unbox<int>(val));
                return;
            case TypeCode.UInt32:
                CompressAndWrite(ref Unsafe.Unbox<uint>(val));
                return;
            case TypeCode.Int64:
                CompressAndWrite(ref Unsafe.Unbox<long>(val));
                return;
            case TypeCode.UInt64:
                CompressAndWrite(ref Unsafe.Unbox<ulong>(val));
                return;
        }
        return;
    }

    // T is the concrete enum type: reinterpret val as its underlying integer type without boxing
    switch (TypeModel.GetTypeCode(type))
    {
        case TypeCode.Byte:
        case TypeCode.SByte:
            Unsafe.WriteUnaligned(buffer.Data + position++, val);
            return;
        case TypeCode.Int16:
        case TypeCode.UInt16:
            Unsafe.WriteUnaligned(ref buffer.AsSpan(position, 2).GetPinnableReference(), val);
            position += 2;
            return;
        case TypeCode.Int32:
            CompressAndWrite(ref Unsafe.As<T, int>(ref val));
            return;
        case TypeCode.UInt32:
            CompressAndWrite(ref Unsafe.As<T, uint>(ref val));
            return;
        case TypeCode.Int64:
            CompressAndWrite(ref Unsafe.As<T, long>(ref val));
            return;
        case TypeCode.UInt64:
            CompressAndWrite(ref Unsafe.As<T, ulong>(ref val));
            return;
    }
}
```

I used many `Unsafe` methods to access memory directly. As shown above, values are assigned straight into the buffer's memory (for example, by taking a span over the target bytes and assigning through a reference to its first element). This significantly improves performance, because the value is written in place rather than being copied into that memory block through an intermediate step. I also applied this in deserialization:

```csharp
/// <summary>
/// Decompress and read enum
/// </summary>
/// <param name="val"></param>
public void DecompressAndReadEnum<T>(ref T val)
{
    if (EndOfReader) return;
    // Write the decoded number straight into the caller's variable by
    // reinterpreting it as the enum's underlying integer type
    switch (TypeModel.GetTypeCode(typeof(T)))
    {
        case TypeCode.Byte:
            Unsafe.As<T, byte>(ref val) = ReadByte();
            return;
        case TypeCode.SByte:
            Unsafe.As<T, sbyte>(ref val) = ReadSByte();
            return;
        case TypeCode.Int16:
            Unsafe.As<T, short>(ref val) = ReadInt16();
            return;
        case TypeCode.UInt16:
            Unsafe.As<T, ushort>(ref val) = ReadUInt16();
            return;
        // 32- and 64-bit values are stored compressed
        case TypeCode.Int32:
            Unsafe.As<T, int>(ref val) = DecompressAndReadNumber<int>();
            return;
        case TypeCode.UInt32:
            Unsafe.As<T, uint>(ref val) = DecompressAndReadNumber<uint>();
            return;
        case TypeCode.Int64:
            Unsafe.As<T, long>(ref val) = DecompressAndReadNumber<long>();
            return;
        case TypeCode.UInt64:
            Unsafe.As<T, ulong>(ref val) = DecompressAndReadNumber<ulong>();
            return;
    }
}
```

Instead of reading the number into a local temporary variable and then copying it to the member, this reads the number from the buffer and writes it directly into the member's memory, which saves a few operations (copies, `mov` instructions, etc.).
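The same write-in-place idea on the reading side can be sketched without Nino's types. `ReadEnum<T>` switches on `Type.GetTypeCode(typeof(T))`, which for an enum returns the code of its underlying integral type, and then stores the decoded bits straight into the caller's variable through `Unsafe.As`. The `Level` enum and the class and method names are hypothetical, and the sketch assumes a plain little-endian layout with no compression, unlike `DecompressAndReadNumber`.

```csharp
using System;
using System.Buffers.Binary;
using System.Runtime.CompilerServices;

// Hypothetical enum used only for this sketch
public enum Level : short { Low = -1, High = 300 }

public static class EnumReaderSketch
{
    // Reads one enum value at 'position' (assumed little-endian, uncompressed) and writes it
    // directly into 'val'. Signed and unsigned types of the same width share the same bits,
    // so each width is read once as its unsigned type.
    public static void ReadEnum<T>(ReadOnlySpan<byte> buffer, ref int position, ref T val) where T : struct, Enum
    {
        switch (Type.GetTypeCode(typeof(T))) // underlying type's code for enums
        {
            case TypeCode.Byte:
            case TypeCode.SByte:
                Unsafe.As<T, byte>(ref val) = buffer[position];
                position += 1;
                return;
            case TypeCode.Int16:
            case TypeCode.UInt16:
                Unsafe.As<T, ushort>(ref val) = BinaryPrimitives.ReadUInt16LittleEndian(buffer.Slice(position));
                position += 2;
                return;
            case TypeCode.Int32:
            case TypeCode.UInt32:
                Unsafe.As<T, uint>(ref val) = BinaryPrimitives.ReadUInt32LittleEndian(buffer.Slice(position));
                position += 4;
                return;
            case TypeCode.Int64:
            case TypeCode.UInt64:
                Unsafe.As<T, ulong>(ref val) = BinaryPrimitives.ReadUInt64LittleEndian(buffer.Slice(position));
                position += 8;
                return;
            default:
                throw new NotSupportedException(typeof(T).ToString());
        }
    }
}
```

Reading the bytes `FF FF 2C 01` twice into `Level` variables yields `Level.Low` (0xFFFF as a `short` is -1) and then `Level.High` (0x012C is 300), leaving `position` at 4.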

I also applied the C# `[In]` attribute to method parameters, intending the compiler to compile them as if they were declared with the `in` keyword. The goal is to avoid copying value types when these methods are called, since copying a large value type is expensive:

```csharp
/// <summary>
/// Write int val to binary writer
/// </summary>
/// <param name="num"></param>
public void Write([In] int num)
{
    // Forward num by reference, together with its size in bytes, to another Write overload
    Write(ref num, ConstMgr.SizeOfInt);
}
```

The intent is that when `Write` is called, the original value is not copied: a reference to it is passed on to another method and written into the buffer, saving the assignment to a local temporary variable and the copy of the original value. Note, however, that in C# it is the `in` modifier (e.g. `Write(in int num)`) that passes a parameter by read-only reference; the `[In]` attribute (`System.Runtime.InteropServices.InAttribute`) on its own only affects interop marshaling, so here `num` is still passed by value and `ref num` refers to the callee's own copy.
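To check which parameter declarations actually avoid the copy, here is a small sketch (the `Matrix4` struct and `PassingSketch` methods are hypothetical) that uses `Unsafe.AreSame` to test whether the callee sees the caller's storage or a copy. Only the `in` modifier passes the struct by read-only reference; a plain by-value parameter and one marked only with `[In]` both receive a copy. The difference matters for large structs such as the 128-byte `Matrix4` below; for a 4-byte `int`, passing by value is already about as cheap as passing a reference.

```csharp
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// Hypothetical 128-byte struct (16 doubles)
public struct Matrix4
{
    public double M00, M01, M02, M03, M10, M11, M12, M13,
                  M20, M21, M22, M23, M30, M31, M32, M33;
}

public static class PassingSketch
{
    // 'in' modifier: the callee receives a read-only reference to the caller's variable
    public static bool WithInModifier(in Matrix4 m, ref Matrix4 original)
        => Unsafe.AreSame(ref Unsafe.AsRef(in m), ref original);

    // [In] attribute only: still an ordinary by-value parameter, so m is a copy
    public static bool WithInAttribute([In] Matrix4 m, ref Matrix4 original)
        => Unsafe.AreSame(ref m, ref original);

    // Plain by-value parameter: m is a copy
    public static bool ByValue(Matrix4 m, ref Matrix4 original)
        => Unsafe.AreSame(ref m, ref original);
}
```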

Overall, together with some other optimizations, the performance of this repository improved by around 30%: in the same test, serialization now takes 140 ms instead of 180 ms (about 1.29× faster, or roughly 22% less time), with similar results for deserialization.

Challenges

There were no real challenges this week, although preparing an English presentation was a pain.

Reflection

This week I did a lot of coding even though I was busy. However, I did not do much school work, which is not good enough, so I need to improve on that next week.

Timeline

This week I mostly kept to my timeline. I finished my User Documentation, so next week I will aim to finish my presentation slides.